Today I have been asked the same question twice, so here you go.

I’ve blogged about search a lot, but one important thing still needs to be covered. Once you are convinced that the “new” search is the right way to go, you may have to deal with its configuration. In an environment with two or more servers, the search index may not be rebuilt after publishing unless it is configured properly.

The main reason for that is the way this functionality is architected. In a distributed system with at least one Content Management (CM) and one Content Delivery (CD) instance, index rebuild works similarly to the way caching worked in the pre-6.3 days. The CD instance maintains its own copy of the search index and does not know about anything that happened on the CM side (publishing).

Your job is to make sure it does.

First of all, as the documentation suggests, make sure you have the following:

0. Make sure that the application pool account has read/write/modify permissions on the /data/indexes folder, or whichever other location your indexes are stored in (thanks for the hint, Joel).

1. HistoryEngine is enabled on the web database:

<database id="web" ...>
  <Engines.HistoryEngine.Storage>
    <obj type="Sitecore.Data.$(database).$(database)HistoryStorage, Sitecore.Kernel">
      <param connectionStringName="$(id)"/>
      <EntryLifeTime>30.00:00:00</EntryLifeTime>
    </obj>
  </Engines.HistoryEngine.Storage>
  …
</database>

You may also have a number of “web” databases configured, “stage-web”, “pub-web”, “prod-web”, etc. As the names of the databases may be different from one environment to another, you need to apply this to the “web” database you use to deliver content in production.

2. The update interval setting is not set to “00:00:00” as this disables the live index rebuild functionality completely:

<setting name="Indexing.UpdateInterval" value="00:05:00"/>

If this is left at the default value shown above, the remote server checks every 5 minutes whether anything needs to be added to the index. Take this into account: everything may be working, but the delay in the rebuild process can cause confusion. Feel free to shorten the interval. The ideal value depends on the environment, frequency of content change, etc. In my experience, I would not set it to anything less than 30 seconds.

3. Enable “Indexing.ServerSpecificProperties” in web.config:

<setting name="Indexing.ServerSpecificProperties" value="true"/>

In most cases, you need this value to be set to “true”. Specifically, this is needed in the following cases, which apply to most installations:

- more than one CD server in a web farm

- CM environment points to the same physical web database as the CD environment.

In a clustered CM environment this setting is overridden and set to “true” automatically, because the EventQueues functionality has to be enabled in such a case.

If this setting is set to “false” and you have one of the configurations mentioned above, the CD server(s) will never know that they need to update the indexes.

After each index update operation, Sitecore writes a timestamp to the Properties table of the database being processed. This helps the IndexingProvider, which is responsible for the index update process, work out which items to pull from the history table during the next index update. With “Indexing.ServerSpecificProperties” set to “false”, the timestamp is not unique to the environment, so the CD server can get confused about which items to process from the history store.

With the setting set to “true”, the instance name becomes part of the timestamp key. The instance name can either be explicitly set in the web.config or created from a combination of the machine name + site name. This guarantees the uniqueness of the key within an environment.
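If you would rather not eyeball the config, the checks from steps 2 and 3 can be scripted. What follows is a minimal Python sketch, not something that ships with Sitecore: it parses a trimmed-down, made-up config fragment (`SAMPLE_CONFIG`) and flags the two settings discussed above. The function name `check_indexing_settings` is hypothetical, and a real installation may spread its settings across include files, which this sketch ignores.

```python
import xml.etree.ElementTree as ET

# Hypothetical, trimmed-down stand-in for the settings section of web.config.
SAMPLE_CONFIG = """
<sitecore>
  <settings>
    <setting name="Indexing.UpdateInterval" value="00:05:00"/>
    <setting name="Indexing.ServerSpecificProperties" value="false"/>
  </settings>
</sitecore>
"""


def check_indexing_settings(config_xml):
    """Return a list of warnings about the two indexing settings."""
    root = ET.fromstring(config_xml)
    # Map setting name -> value for every <setting> element found.
    settings = {s.get("name"): s.get("value") for s in root.iter("setting")}
    warnings = []

    interval = settings.get("Indexing.UpdateInterval")
    if interval is None:
        warnings.append("Indexing.UpdateInterval is not defined")
    elif interval == "00:00:00":
        warnings.append("Indexing.UpdateInterval is 00:00:00: live index updates are disabled")

    server_specific = settings.get("Indexing.ServerSpecificProperties")
    if (server_specific or "").lower() != "true":
        warnings.append("Indexing.ServerSpecificProperties is not set to true")

    return warnings


if __name__ == "__main__":
    for warning in check_indexing_settings(SAMPLE_CONFIG):
        print("WARNING:", warning)
```

Run against the sample fragment, this reports only the ServerSpecificProperties problem, since the update interval there is non-zero.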

4. Check your index configuration.

4.1 Your search index configuration in CD may be referencing the “master” database instead of “web”.

4.2 Check that the root item the index is configured to point to actually exists in the “web” database:

<search>
  <configuration>
    <indexes>
      <index id="test" type="Sitecore.Search.Index, Sitecore.Kernel">
        <param desc="name">$(id)</param>
        <param desc="folder">test</param>
        <Analyzer ref="search/analyzer" />
        <locations hint="list:AddCrawler">
          <master type="...">
            <Database>master</Database>
            <Root>/sitecore/content/test</Root>

4.3 If you filter the index by template, give each entry under the include element a unique name:

<include hint="list:IncludeTemplate">
  <residential>{71D42CF2-CE89-4030-9EB1-0065B35B78C4}</residential>
  <business>{ED9F466B-D436-4A3F-B22F-EA6E8097085D}</business>
  <industry>{78166FE4-EDFB-4B0D-A3ED-860AEB44CD40}</industry>
</include>

Otherwise only the last item gets into the filter, which is what happens if you define it like this:

<include hint="list:IncludeTemplate">
  <template>{71D42CF2-CE89-4030-9EB1-0065B35B78C4}</template>
  <template>{ED9F466B-D436-4A3F-B22F-EA6E8097085D}</template>
  <template>{78166FE4-EDFB-4B0D-A3ED-860AEB44CD40}</template>
</include>

If you went through all these steps and still can’t get the indexing to work, here is what you can do.

Since there are a few things that can go wrong, we need to tell apart two possible culprits: the “live indexing” functionality, which relies on the history store and update intervals, and the index configuration itself.

To find out whether your index is properly configured at all, follow these steps to run a full index rebuild process on the CD side.

1. Download this script and copy it to the /layouts folder of your CD instance.

2. Execute it in the browser by going to http://<your_site>/layouts/RebuildDatabaseCrawlers.aspx

3. Select the index you want to rebuild and hit Rebuild.

This will launch a background process so there will be no immediate indication whether the index is rebuilt or not. I suggest looking into the log file to confirm this actually worked.

If you do not see your custom index in the list, this means that your index is not properly registered in the system. This is a configuration problem. Review your configuration files and make sure the index is there.

If, after the index is fully rebuilt, you start getting hits and the search index contains the expected number of documents (you can use either IndexViewer or Luke to confirm that), then the index itself is configured properly, and what remains is to make sure that the “live indexing” portion works.

In order to do that, follow these steps:

1. Log in to the CM instance.

2. Modify an item that you know is definitely included in the search index (change the title field, for example).

3. Save.

4. Check that the item change is reflected in the index on the master/CM side.

5. Publish.

6. Verify that the item change got published and the cache was cleared.

7. In SQL Server Management Studio, query the History table of the web database:

SELECT Category, Action, ItemId, ItemLanguage, ItemVersion, ItemPath, UserName, Created
FROM [Sitecore_web].[dbo].[History]
ORDER BY Created DESC

You should be able to see a few entries related to your item change.

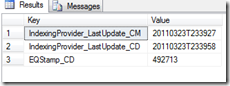

8. Now query the Properties table of the Web database:

SELECT [Key], [Value] FROM [Sitecore_web].[dbo].[Properties]

You should see two “IndexingProvider”-related entries, one for each of the environments.

Note that the actual key names could be different, depending on your configuration.

As previously indicated, these UTC-based timestamps help the IndexingProvider work out which items to pull from the history table during the next index update.

So naturally, the timestamp for the CD environment should be later than the one for CM.

If you do not see an entry for the CD environment, then something is wrong with the configuration. Double check the UpdateInterval setting, history table and index configuration.
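The comparison in step 8 is easy to automate once you have the two values. Here is a small Python sketch that parses the timestamp format and reports how far CD trails CM. The key names and sample values are taken from a reader's report in the comments on this post, so treat them as assumptions: yours may differ. The helper names `parse_timestamp` and `cd_lag` are hypothetical.

```python
from datetime import datetime, timedelta

# Timestamp layout as it appears in the Properties table, e.g. 20111219T145137.
TIMESTAMP_FORMAT = "%Y%m%dT%H%M%S"


def parse_timestamp(value):
    """Turn a Properties-table value such as '20111219T145137' into a datetime."""
    return datetime.strptime(value.strip(), TIMESTAMP_FORMAT)


def cd_lag(properties, cm_key, cd_key):
    """Return CM time minus CD time, or None when the CD entry is missing.

    A positive result means the CD index update trails the CM one.
    """
    if cd_key not in properties:
        return None
    return parse_timestamp(properties[cm_key]) - parse_timestamp(properties[cd_key])


if __name__ == "__main__":
    # Sample rows as they might come back from the Properties query
    # (key names are assumptions, copied from a reader's comment).
    rows = {
        "IndexingProvider_LastUpdate_CM": "20111219T145137",
        "IndexingProvider_LastUpdate_CD": "20111118T160052",
    }
    lag = cd_lag(rows, "IndexingProvider_LastUpdate_CM", "IndexingProvider_LastUpdate_CD")
    if lag is None:
        print("No CD entry: check UpdateInterval, history table and index configuration")
    elif lag > timedelta(0):
        print("CD is behind CM by", lag)
    else:
        print("CD is up to date")
```

A lag of a few minutes right after a publish is expected with a 5 minute update interval. A lag of days, as in the sample values, points at a CD server that is not processing the history table.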

9. Open up the most recent log file on CD and look for the following entries:

ManagedPoolThread #12 16:39:58 INFO Starting update of index for the database 'web' (1 pending).

ManagedPoolThread #12 16:39:58 INFO Update of index for the database 'web' done.

These entries indicate that the IndexingProvider kicked in and processed the changed items (1 in this case). The item should now be in the physical index file as a document.

If you do not see these messages, then something is wrong with the actual crawler piece. Look out for any exceptions that appear in this timeframe. The DatabaseCrawler component may not be processing your items properly. So you may have to override it and step into the code to figure out what’s going on.
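On a busy server, scrolling through the log for these two lines gets tedious. The following Python sketch scans log lines for the "Starting update" and "done" messages quoted above and counts, per database, how many updates started without finishing. The regular expressions are based only on the log lines shown in this post, and the function name `unfinished_index_updates` is hypothetical. Treat it as a starting point and adjust it to what your logs actually look like.

```python
import re

# Patterns modeled on the two log messages quoted in this post.
START = re.compile(
    r"INFO\s+Starting update of index for the database '(?P<db>[^']+)' "
    r"\((?P<pending>\d+) pending\)"
)
DONE = re.compile(r"INFO\s+Update of index for the database '(?P<db>[^']+)' done")


def unfinished_index_updates(log_lines):
    """Return {database: number of updates that started but never logged 'done'}.

    An empty result means no update was started at all in these lines.
    """
    started = {}
    finished = {}
    for line in log_lines:
        match = START.search(line)
        if match:
            started[match["db"]] = started.get(match["db"], 0) + 1
            continue
        match = DONE.search(line)
        if match:
            finished[match["db"]] = finished.get(match["db"], 0) + 1
    return {db: count - finished.get(db, 0) for db, count in started.items()}


if __name__ == "__main__":
    sample = [
        "ManagedPoolThread #12 16:39:58 INFO Starting update of index for the database 'web' (1 pending).",
        "ManagedPoolThread #12 16:39:58 INFO Update of index for the database 'web' done.",
    ]
    for db, unfinished in unfinished_index_updates(sample).items():
        print(db, "unfinished updates:", unfinished)
```

A non-zero count for 'web' means an update started but did not complete within the lines you fed in, which is the cue to look for exceptions in the same timeframe.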

10. As a final check, take a close look at the search index files themselves.

The tools mentioned earlier, IndexViewer and Luke, will help you browse the contents of the index and search it.

If you have made it through this checklist and are still reading, my hope is that your search is already working properly.

26 comments:

Alex, I'm really glad you wrote this post. I was thinking about writing some of these details down myself but I forgot a lot of them. You went through these exact steps with me months ago to get the Advanced Database Crawler to work right in two CD environments. On our site that used your new tool we snagged some of your code from the RebuildDatabaseCrawlers.aspx.cs page and used it as a daily script to rebuild the indexes. Thanks again for the important details!

You are welcome!

Alex, after your recommendation we upgraded our development server from 6.0 to the latest release and are trying to implement web search based on the Sitecore Lucene module, but there is a puzzle I am still trying to solve. All our web pages share a lot of renderings to build the different menus on pages, so when a user runs a web search for a word that exists in a menu, it returns many pages where the search word is not part of the content.

I built our new index with "ExcludeTemplate" but it does not solve the problem.

I discovered a suggestion but I do not like it:

http://wiki.evident.nl/Sitecore Exclude content from Search Server crawler.ashx

Can you recommend some solution?

Hi Alex, we have a custom cache in our code that we would like to have cleared after the reindexing occurs, so that it will rebuild based on the newly indexed items. We were thinking of doing this first on the publish:end event, but that doesn't guarantee that the index has finished being rebuilt. Instead, we thought maybe we could somehow catch the event for when the index got finished being rebuilt, but I've had a hard time finding if this is possible.

What do you recommend?

Hi Liz,

Can you check the last post in the following thread? Looks like it's the same question.

http://sdn.sitecore.net/SDN5/Forum/ShowPost.aspx?PostID=40437

-alex

Alex,

what can I say? Thank you so much for taking the time to write this post. It's very very useful!

I have a question, though. I am trying to make things work on a QA environment (Sitecore 6.5 update 2) where I have one CM server and one CD server.

I can see that the timestamps on the indexes folders on both CM and CD change every minute (I set the refresh time to 1 minute) but when I run your SQL statement I see that the LastUpdate date on the CM changes, while the CD doesn't change.

These are the two dates I see right now in the DB Properties table:

IndexingProvider_LastUpdate_CM 20111219T145137

IndexingProvider_LastUpdate_CD 20111118T160052

I even tried to update manually the timestamp for the CD to match the one from CM, but it got reset to what you see here.

Looking in the logs I also noticed that I see, once in a while, that the system tries to rebuild the 'web' index, but it never gets to the end of it, probably because being just a QA environment, sitecore shuts down due to inactivity before the completion of the job. I do see the following line in the logs, though:

INFO Starting update of index for the database 'web' (16462 pending).

So, when I publish a new item it shows up in CM preview mode, but doesn't show up on the CD.

I have tried your RebuildDatabaseCrawlers.aspx script - which correctly shows all the indexes - but that didn't help either. It does run - I checked in the logs:

INFO Job started: RebuildSearchIndex

[...]

INFO Job ended: RebuildSearchIndex (units processed: 35988)

but even after a refresh of the cache, the new item does not show up on CD.

Do you have any ideas what I should be looking at next?

I did a line by line comparison of the web.config between QA and production and (besides the obvious differences due to the fact that production is still a Sitecore 6.2 installation, while QA is an upgrade to Sitecore 6.5) I didn't see any difference in regards to the indexes themselves.

I'd really appreciate if you could shed some light on this since I trust your expertise more than anyone else on Sitecore.

Thanks in advance and sorry for the long message!

FG

Hi Francesco,

One thing to try is to clean up the history table on the "web" database by running "delete from history".

The volume of data may be too large for the IndexingManager to process.

After that, perform a full rebuild via RebuildDatabaseCrawlers.aspx, publish an item and see if the timestamps get updated.

Email me AS at sc.net if this does not help.

-alex

Alex,

you surprise me one more time :)

I had noticed myself that the system was indeed slowly processing those 30k+ items in the history table - the proof of that being that the CD date in the properties table was slowly changing - and that the only reason why it never got to finish was because the "keepalive" URL was incorrectly set up, causing the system to shut down after a certain period of inactivity. Someone had performed a full publish on this large site in QA for testing purposes and essentially brought the system to a halt, from an indexing perspective.

Like you said, I cleaned up the history table and now the Properties table looks great:

IndexingProvider_LastUpdate_CM 20111219T191756

IndexingProvider_LastUpdate_CD 20111219T192554

The logs look great as well:

ManagedPoolThread #5 14:25:55 INFO Starting update of index for the database 'web' (3 pending).

ManagedPoolThread #5 14:25:59 INFO Update of index for the database 'web' done.

And the newly published item got to the CD in a matter of minutes.

Thanks again for your prompt and kind help!

Awesome, glad it did the trick!

I should update the blog post with this info ;-)

Hi Alex,

I have used your sitecore lucene shared source module and it was great...

But, now i'm facing a problem...

We have two servers for CM and CD..

when I create an item on CM server and publish it, it will not come to the CD server index...

When I check the running processes using the "Publish Viewer" tool, the "UpdateIndex_web" task is running continuously without stopping....

I followed your steps (delete history table and rebuild) but that didn't solve the problem...

Can you please help me..

Thanks,

Chaturanga

Hi Chaturanga, Have you tried whacking the index files and running full index rebuild with the aspx page attached?

I am trying out the v2 crawler / searcher and I setup an index which contains about 2000+ items (including multiple versions, etc). I can query it but the time it takes to query and then retrieve 980 unique items is something like 2 mins. Any thoughts about what could be making it take so long?

David,

Are you returning skinny items or full items?

For skinny items this should take milliseconds.

Drop me an email: AS at sitecore.net

Alex, I have made the changes you mentioned in your blog, but could not fix the issue. I have one CM and two CD environments. CM works fine, but the CD environments do not. Could you suggest what can be done?

Hi Alex,

I'm working on a website which has an indexing configuration like the one you mentioned in 4.3.

Could you please briefly explain exactly how to configure it when you index based on template IDs?

Thanks,

Mrunal

Hello Alex,

have you ever seen an issue where the scheduled indexer doesn't start indexing the searchindex anymore?

Or when the searchindex seems to get corrupt after a few days?

I have already emptied the HistoryTable, lowered the EntryLifeTime on the web and web-frontend db to 2 days and rebuilt the searchindex.

Every night a scheduled import runs which removes the products (about 220), recreates new items and does a publish. Sometimes this works OK for a few times, but a lot of the time the index doesn't remove its old items and add the new ones, or the indexer doesn't update the index at all.

Any more clues?

@Martin: I'm facing the same issue. I have 5 separate indexes, but when a bulk update and publish is done, it fails :(. I am currently investigating why those 5 search indexes are not updated on publish events the way they are when you rebuild manually.

Also another confusion does database section contains index section or not?

If you use BulkUpdateContext, no entries will be registered in the history table, so the incremental index update won't work. This is expected behavior. You will have to do full rebuild. This is not related to ADC, it's how Sitecore indexing mechanisms work in 6.x.

@Alex, what do you mean by BulkUpdateContext? Is it that we are editing items in bulk (in a loop) and publishing them all, as their parent item is the same?

If not, then could you please explain a bit more?

Also, in our case we edit each item with BeginEdit and EndEdit operations.

BulkUpdateContext is a Sitecore class. If you wrap your code inside BulkUpdateContext, no history entries will be written and incremental index update would not be triggered. Check if you use it in your code somewhere.

Otherwise, your BeginEdit/EndEdit calls should result in entries in History (easy to check) if it is enabled for the database where the update operation takes place.

If you see new entries in History table, it's probably the IndexingProvider that can't manage to read those to update the index.

>>> Also another confusion does database section contains index section or not?

Depends on whether you use Sitecore.Search or Sitecore.Data.Indexing namespace.

Sitecore.Search indexes are defined outside of the databases section.

BTW, Martin found the source of his problem: "In the meantime I noticed that the rootPath of my Index was pointing to the Category item that is removed and recreated during the import process. This confuses the Crawler and causes the crawler to stop working. After modifying my index rootPath to its parent item, the strange behavior was gone :)"

Check whether you have a similar situation.

Hi Alex, is the zip file still available? I am having no luck downloading it from your site...

Alex, my search is working fine in CM whereas in CD I am getting an error. I tried rebuilding the search index several times. But the error persists.

I compared the search index files present in CM and CD, and they are identical. I am not sure whether the root defined for my index is present in the web database. Can you please help me in verifying that?

Thanks in advance...

hi alex,

We have a multiserver environment.

1 CM server and 2 CD servers.

We made all of the changes you mentioned in this post. The index does rebuild on one of the CD servers as soon as we publish the blog, but on the other CD server it is not rebuilding.

Hi Venkat,

Have you done a full binary Beyond Compare on the whole webroot between the two CD servers? Any difference?

Another thought - check if the server time is in sync on both servers.

-alex
